Performance troubleshooting: a struggle shared by customers since the beginning of the ServiceNow® platform life cycle.

Customers run dedicated optimization and development projects to fine-tune customized reports, features, and potentially heavy jobs, all with the platform's performance, availability, and sustainability in mind.

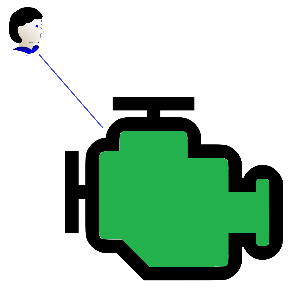

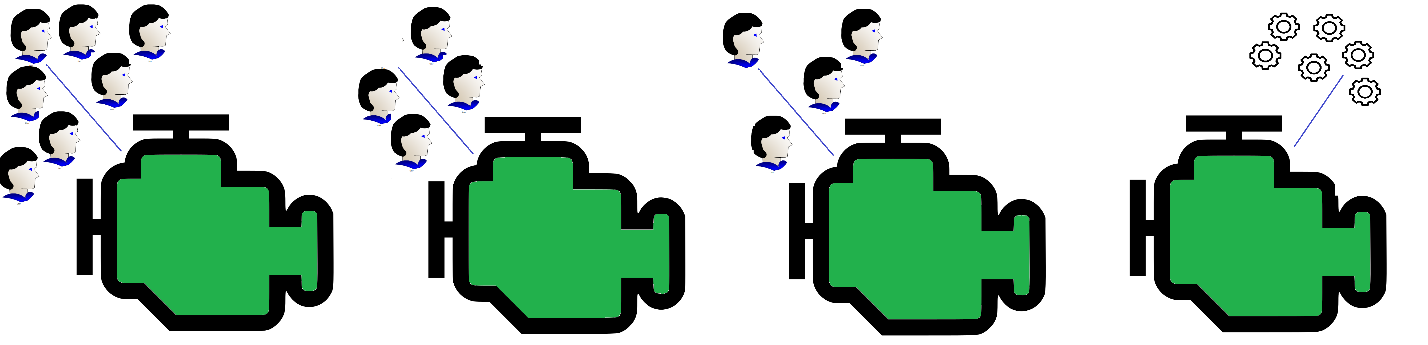

When one node cannot handle all requirements

Most of the load comes from the application itself. The application is built and executed by two or more application nodes, which are responsible for the complete processing of:

- end-user transactions,

- background jobs such as on-demand reports,

- scheduled jobs,

- and even internal jobs that the platform executes by default.

In a test or personal development environment, we can efficiently run a single application node and host the complete platform on it.

Complexity grows as we connect more end-users and implement more customizations, until one application node can no longer handle all platform requirements, or even respond to a single user.

Availability of instances

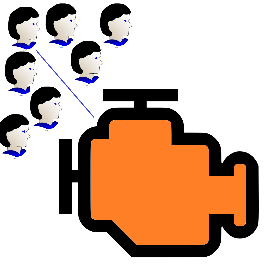

The ServiceNow® platform provides a number of features that reserve resources and keep instances available. For example, only a limited number of threads (semaphores) are available for running concurrent end-user requests. When the instance is somewhat overloaded, additional requests are parked in a limited queue, where they wait for a free semaphore before they can proceed.
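To make this queueing behavior concrete, here is a minimal Python sketch of a node's semaphore set. The `SemaphorePool` class, its slot and queue sizes, and the assumption that a request is rejected once the queue is full are illustrative only; they are not the platform's actual implementation or configuration.

```python
from collections import deque
from typing import Optional


class SemaphorePool:
    """Toy model (hypothetical, not the platform's code): a fixed number of
    slots for concurrent requests plus a bounded wait queue for requests
    that arrive while every slot is busy."""

    def __init__(self, slots: int, max_queue: int) -> None:
        self.slots = slots          # concurrent requests allowed (assumed value)
        self.max_queue = max_queue  # requests allowed to wait (assumed value)
        self.running: set[str] = set()
        self.queue: deque[str] = deque()

    def submit(self, request_id: str) -> str:
        """Return 'running', 'queued', or 'rejected' for a new request."""
        if len(self.running) < self.slots:
            self.running.add(request_id)
            return "running"
        if len(self.queue) < self.max_queue:
            self.queue.append(request_id)
            return "queued"
        return "rejected"  # assumption: a full queue cannot accept more waiters

    def finish(self, request_id: str) -> Optional[str]:
        """Release a slot and hand it to the oldest queued request, if any."""
        self.running.remove(request_id)
        if self.queue:
            next_id = self.queue.popleft()
            self.running.add(next_id)
            return next_id
        return None


pool = SemaphorePool(slots=2, max_queue=1)
print([pool.submit(r) for r in ["r1", "r2", "r3", "r4"]])
# ['running', 'running', 'queued', 'rejected']
print(pool.finish("r1"))  # r3 takes over the freed slot
```

The queue is first-in, first-out, so the request that has waited longest gets the next free semaphore.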

At the same time, other transactions run simultaneously, possibly with a different or higher priority and unrelated to end-user transactions.

For this purpose, separate API-INT, SOAP, and other semaphores are available. User transactions therefore do not wait for integration transactions, and vice versa, so at first glance these transaction types appear not to affect each other.
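The isolation between semaphore sets can be sketched as independent counters, one per transaction type. In this Python model, the `SlotPool` class, the pool names used as keys, and the slot counts are hypothetical; it only shows that exhausting one set does not block requests that draw from another.

```python
class SlotPool:
    """Hypothetical fixed-size set of semaphores for one transaction type."""

    def __init__(self, name: str, slots: int) -> None:
        self.name = name
        self.slots = slots
        self.in_use = 0

    def try_acquire(self) -> bool:
        """Take a slot if one is free; otherwise the caller has to wait."""
        if self.in_use < self.slots:
            self.in_use += 1
            return True
        return False


# One independent set per transaction type (names and sizes are assumptions).
pools = {
    "default": SlotPool("Default", slots=4),
    "API-INT": SlotPool("API-INT", slots=2),
}

# Saturate the integration set with three concurrent integration calls.
integration_results = [pools["API-INT"].try_acquire() for _ in range(3)]
print(integration_results)  # [True, True, False]

# An end-user request still gets a slot: it only competes within "default".
print(pools["default"].try_acquire())  # True
```

What the model deliberately leaves out is that every one of these sets still runs on the same node, sharing its CPU and memory.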

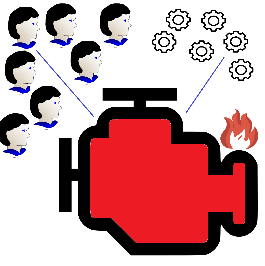

Is your node healthy… or NOT?

However, several additional threads also run in the background and handle background job processing. An application node can therefore be overloaded by parallel activities even when resources still seem to be available to connect, request, and execute work.

Remember: There is only ONE engine inside!

Regardless of how many threads, semaphores, or external resources are defined, there is only one engine running at the center of each application node, with limited memory and processing capacity.

The performance of your instance, and of each application node that serves it, depends on the number of concurrent background processes, the load of integration transactions, and, ultimately, end-user requests. Together, these determine whether all or part of your instance (an application node) is usable, responsive, and healthy enough... or NOT.
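The "one engine" argument is essentially additive arithmetic: every workload draws from the same capacity. The following Python sketch illustrates it. The `NodeLoad` fields, the capacity shares, and the 80 % `HEALTHY_LIMIT` are hypothetical numbers chosen for illustration, not platform metrics or thresholds.

```python
from dataclasses import dataclass


@dataclass
class NodeLoad:
    """Share of one node's processing capacity (0.0 to 1.0) used by each
    workload. All figures are hypothetical."""
    background: float   # scheduled and background jobs
    integration: float  # machine-to-machine transactions
    end_user: float     # interactive UI requests


HEALTHY_LIMIT = 0.8  # assumed utilization ceiling, not a platform setting


def node_state(load: NodeLoad) -> str:
    """All workloads share the same engine, so their shares simply add up."""
    total = load.background + load.integration + load.end_user
    if total <= HEALTHY_LIMIT:
        return "healthy"
    if total <= 1.0:
        return "degraded"
    return "overloaded"


# Free semaphores do not help once the shared engine is already busy.
print(node_state(NodeLoad(background=0.2, integration=0.2, end_user=0.3)))  # healthy
print(node_state(NodeLoad(background=0.5, integration=0.3, end_user=0.3)))  # overloaded
```

The end-user share is identical in both cases; only the background and integration load changed.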

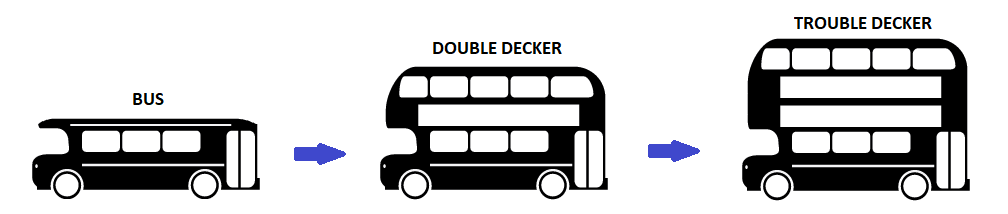

Explaining the application node: the case of a “trouble decker”

We have described the application node as a central engine with limited resources that provides ServiceNow® instance services. Let's visualize this with a simple example from public transportation.

A single-deck bus can carry a certain number of passengers.

✔️ That is fine. The bus is running quite well, and the passengers are happy.

By extending the bus with an additional floor, we get more space for passengers.

❓ But the bus becomes less stable, and the journey will not be as fast as with the original bus. Comfort is also slightly reduced, as we need to place stairs in the middle of the bus.

A double-decker bus is still quite OK: more capacity, at the cost of a small compromise on speed and comfort.

We could certainly add one, or even several, additional decks on top of our double-decker. But would this multi-decker bring any added value?

❌ Well, this “trouble-decker” would certainly add more trouble: a volatile and dangerous vehicle with a totally overloaded engine inside, and discomfort for its passengers.

That is precisely what an overloaded application node looks like: too many floors, too many transactions, too many background and scheduled jobs, all executed by a single central node engine.

Don't create a trouble decker: balance load by defining separate pools

To relieve application node resources and keep node response times acceptable, at least for end-users, there are a couple of tasks to do, regardless of whether your instance runs self-hosted in your own data center or in the ServiceNow®-hosted cloud environment.

If we expect the instance to respond quickly to end-users and to provide a visibly good end-user experience, we have to separate different types of transactions.

Fortunately, we can define separate pools of application nodes, each dedicated to a specific type of usage and reached through its own load balancing configuration.

Load balancing approach and pools:

- UI nodes

- Worker nodes

- Integration nodes

- Maintenance pool
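The role of each pool, as described in the sections that follow, can be summarized as a lookup table. This Python sketch uses illustrative pool keys and a hypothetical `Workload` enumeration. It does not model the platform core jobs that still run on every node, and it does not reproduce any real ServiceNow configuration.

```python
from enum import Enum


class Workload(Enum):
    END_USER = "end-user request"
    SCHEDULED_JOB = "scheduled job"
    BACKGROUND_JOB = "background job (e.g. on-demand report)"
    INTEGRATION = "integration / monitoring call"
    MAINTENANCE = "maintenance access"


# Which workloads each pool accepts (pool names are illustrative).
POOL_ROLES: dict[str, set[Workload]] = {
    "ui": {Workload.END_USER},
    "worker": {Workload.SCHEDULED_JOB, Workload.BACKGROUND_JOB},
    "integration": {Workload.INTEGRATION},
    "maintenance": {Workload.MAINTENANCE},
}


def pool_for(workload: Workload) -> str:
    """Return the single pool responsible for a given workload type."""
    matches = [pool for pool, roles in POOL_ROLES.items() if workload in roles]
    if len(matches) != 1:
        raise ValueError(f"{workload} must map to exactly one pool, got {matches}")
    return matches[0]


for w in Workload:
    print(f"{w.value:40} -> {pool_for(w)}")
```

Requiring exactly one match per workload type is the point of the design: no transaction type shares a pool with another, so none can starve another of node resources.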

UI nodes for the end-user experience

The UI node pool should be limited to serving end-user requests. Background job processing and scheduled job execution should be disabled on these nodes.

Only system-based (platform core) jobs should run there.

UI nodes are used only by end-users: scheduled jobs and reports are not executed there, and heavy integrations do not use them.

This pool of application nodes exists only to provide a good end-user experience. Nothing more.

Worker nodes for background jobs processing

The worker node pool does not provide any resources to end-users, and end-users have no way to even access it.

On the load balancer side, worker nodes should be reachable only through a private URL for maintenance purposes, never publicly. These nodes are selected to run all scheduled jobs and are not used by any end-user.
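The rule "scheduled jobs run only on worker nodes" can be sketched as a dispatcher that filters the node list before assigning work. In this Python sketch, the node names, the `dispatch` helper, and the round-robin policy are all hypothetical. The real platform's mechanism for claiming jobs works differently and is not modeled here.

```python
from itertools import cycle

# Hypothetical node inventory: node name -> pool it belongs to.
NODES = {
    "node-ui-1": "ui",
    "node-ui-2": "ui",
    "node-worker-1": "worker",
    "node-worker-2": "worker",
    "node-int-1": "integration",
}


def scheduler_nodes(nodes: dict[str, str]) -> list[str]:
    """Only nodes in the worker pool are allowed to pick up scheduled jobs."""
    return sorted(name for name, pool in nodes.items() if pool == "worker")


def dispatch(jobs: list[str], nodes: dict[str, str]) -> dict[str, str]:
    """Spread jobs round-robin across worker nodes (an illustrative policy)."""
    workers = scheduler_nodes(nodes)
    if not workers:
        raise RuntimeError("no worker nodes available to run scheduled jobs")
    return dict(zip(jobs, cycle(workers)))


print(dispatch(["cleanup", "sla-calc", "report-export"], NODES))
# {'cleanup': 'node-worker-1', 'sla-calc': 'node-worker-2', 'report-export': 'node-worker-1'}
```

UI and integration nodes never appear in the result, however many jobs are queued.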

Worker nodes put all their effort into processing background and scheduled jobs.

Integration nodes for machine-based transactions

A set of application nodes dedicated solely to integrations is worth considering when you plan, design, or develop an integration with an expected heavy and continuous load.

It is recommended to use separate application nodes and a dedicated load balancer set for this type of integration, or whenever the number of integrations is very large.

Integration nodes are used only by automation, integration, monitoring, and similar machine-based transactions. They are not used by end-users, and scheduled jobs are not executed there.

Maintenance pool

While each of the pools above is accessible separately through the load balancer, let's also define an additional private pool that gives access to any application node within the instance for maintenance purposes. There is no restriction here: a member of the maintenance team can access any application node of the instance, regardless of its purpose.
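Taken together, the load balancer exposes one front-end per pool, and only some of them are public. This Python model is a sketch under stated assumptions: the hostnames, the node inventory, and the choice to make the integration front-end public are hypothetical, and it stands in for whatever load balancer product you actually use. It encodes the rules described above: worker and maintenance entry points are private, and the maintenance pool reaches every node.

```python
from dataclasses import dataclass

# Hypothetical node inventory: node name -> functional pool.
NODES = {
    "node-ui-1": "ui",
    "node-ui-2": "ui",
    "node-worker-1": "worker",
    "node-int-1": "integration",
}


@dataclass(frozen=True)
class Frontend:
    hostname: str  # illustrative hostnames, not real instance URLs
    pool: str      # "ui", "worker", "integration" or "maintenance"
    public: bool   # reachable from outside the private network?


FRONTENDS = [
    Frontend("itsm.example.com", "ui", public=True),
    Frontend("api.example.com", "integration", public=True),  # assumed public
    Frontend("workers.internal.example.com", "worker", public=False),
    Frontend("maint.internal.example.com", "maintenance", public=False),
]


def backends(frontend: Frontend) -> list[str]:
    """Nodes a front-end may forward to; the maintenance pool reaches them all."""
    if frontend.pool == "maintenance":
        return sorted(NODES)
    return sorted(n for n, pool in NODES.items() if pool == frontend.pool)


def route(hostname: str, from_private_network: bool) -> list[str]:
    """Resolve a request's hostname to candidate nodes, enforcing privacy."""
    for fe in FRONTENDS:
        if fe.hostname == hostname:
            if not fe.public and not from_private_network:
                raise PermissionError(f"{hostname} is private")
            return backends(fe)
    raise LookupError(f"unknown hostname {hostname}")


print(route("itsm.example.com", from_private_network=False))
# ['node-ui-1', 'node-ui-2']
print(route("maint.internal.example.com", from_private_network=True))
# ['node-int-1', 'node-ui-1', 'node-ui-2', 'node-worker-1']
```

A request for a worker or maintenance hostname from outside the private network raises `PermissionError` instead of reaching any node.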

Pooling the nodes improves user satisfaction

No user enjoys being slowed down by a scheduled job running somewhere in the background and consuming node resources. Some users might not even know this is happening.

At the same time, background jobs can be impacted by poorly defined end-user queries, long-running home pages, or too many users working concurrently on the same ServiceNow® instance node.

What is a viable solution to this problem? Dividing nodes into pools. The purpose of dividing nodes into pools and assigning them distinct roles within your ServiceNow® instance is to reserve enough resources for each transaction type and to keep one type of transaction from degrading node performance for the others.

Thank you for your time,

Radim

Senior ServiceNow Architect